September 2025 - In this interview, Ingo Friese (IF) of Deutsche Telekom’s (DT) T-Labs examines Agentic AI at its intersection with IoT. He describes early experiments with the application of agents in IoT systems and some of the issues that the technical community will need to solve to enable operational, scalable, and trustworthy systems.

Q: Let us begin by talking about your background and role in DT.

IF: I am an engineer and software architect with a 25-year background in the telecommunications industry. I love to write code. I especially like the "beauty" of microservices, an approach that enables me to build massively distributed and complex applications.

I have been a product owner working with a developer team in T-Labs, which is DT’s arm for Research & Innovation activities. We get involved in a range of projects. One such project, which we discussed some years back, is the EU-funded Horizon 2020 project called MySMARTLife. It falls into the smart cities category and involved the cities of Nantes, Hamburg, and Helsinki. The aim of the project was to make these cities more environmentally friendly by reducing CO2 emissions and increasing the use of renewable energy sources. We worked closely with Hamburg on our portion of the project and contributed to the closing knowledge-sharing workshop that took place in Hamburg in 2022.

I remain involved in IoT standardization via oneM2M and am looking at new developments, such as AI, that are complementary to IoT systems.

Q: You are leading an effort on Agentic AI in oneM2M. What is the background to that initiative?

IF: No doubt you have heard that Agentic AI has become a big buzz term in the technology sector. In simple terms, it is a way to build on large language models (LLMs) to complete a defined task. This requires the addition of memory and the ability for a software agent to interact with external functions. You can expand this idea to groups of task-specific agents. There might be an agent that summarizes a research paper and another that synthesizes results from a search engine to generate a summary report.

These ideas bring us to the concept of an agentic web, in which a combination of several agents completes a more complex task. For example, a student working in our T-Labs was recently looking to rent garage space to store his motorbike. He set this as a problem for a Google Agent service. It provided him with a list of landlords who rent out garage spaces in his search area.

Q: How do these ideas carry over into the IoT arena?

IF: The whole area around AI agents is evolving at quite a rapid pace. Interactions with external components such as connected devices, sensors, and data repositories, among others, bring AI face to face with the IoT. To get to this future, I see two hurdles to overcome. One is a way of allowing agents to communicate with one another. If you are booking a holiday, for example, there might be an agent for flights, another for local taxis, a third for accommodation and so on. The agents might need to communicate with one another and with an orchestrator agent. Google’s agent-to-agent (A2A) protocol seems to address this need.

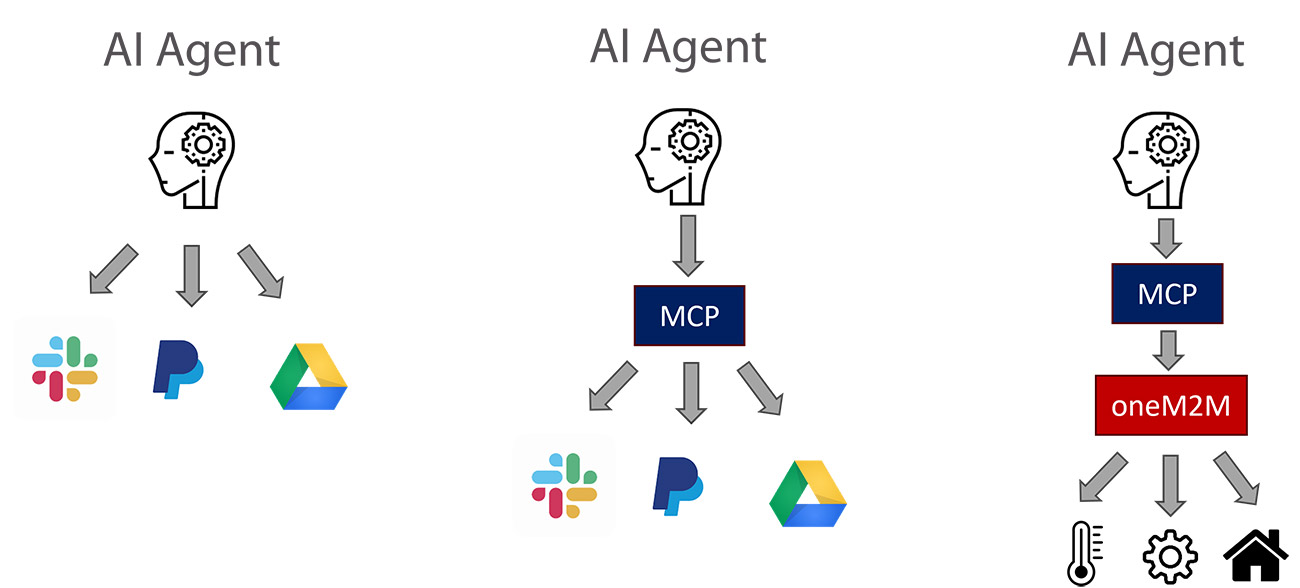

Then, the second hurdle is to allow agents to interact with non-AI entities. I am experimenting with smart home components and an example interaction involves an AI agent requesting environmental data from a home weather station. Somehow, the (AI) agent needs additional functionality and API access to interact with the (non-AI) station. Model Context Protocol (MCP) has emerged as a technology to address this challenge. This shows you where Agentic AI overlaps with IoT.

Q: What are your plans for Agentic AI in oneM2M?

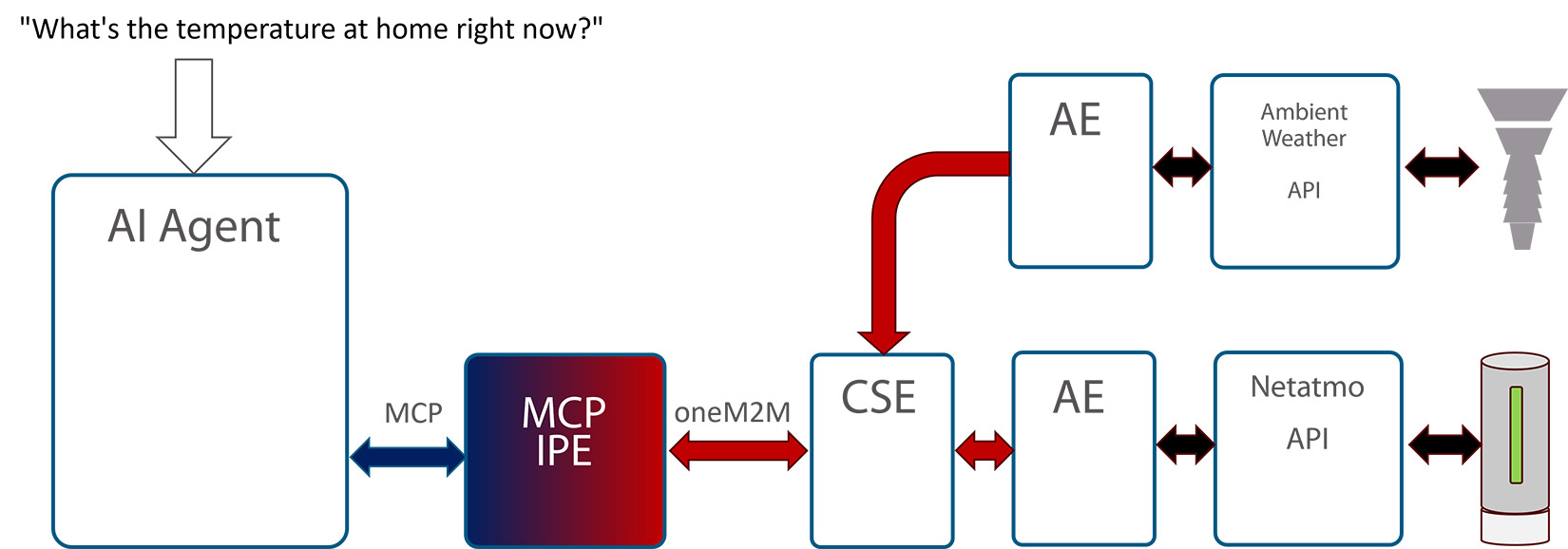

IF: I am currently working on a Technical Report that will eventually lead to standardization in oneM2M’s roadmap. To begin with, I focused on definitions and early experimentation. Given my interest in smart home concepts, I built a simple AI Agent system. As illustrated, the basic building blocks include a conversational AI agent that accepts a user instruction to fetch data from a Netatmo weather station, which measures temperature, humidity, atmospheric pressure, and air quality. I can also request outside weather information from the Ambient Weather service.

The AI and IoT domains are connected via an interworking proxy entity (IPE) and a common services entity (CSE). The IPE provides a mapping between MCP and oneM2M primitives. The CSE in this case is the open-source ACME CSE; it performs the role that is commonly associated with an IoT platform. The experiment has gone well so far, with the basic MCP-to-oneM2M mapping being relatively easy to implement. I have learned a lot from the experiment, including requirements for a more robust and fully functioning system. These will be items to study in the oneM2M work item.
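As a rough illustration of the mapping an IPE performs, the following sketch translates an MCP tool call into a oneM2M RETRIEVE request and wraps the returned value as an MCP tool result. It is not the IPE used in the experiment. The tool names, resource paths, and originator ID are hypothetical; the short names (`op`, `to`, `fr`, `rqi`, `rvi`, `pc`) follow oneM2M conventions.

```python
import uuid

# Hypothetical mapping from MCP tool names to oneM2M resource paths.
# "la" addresses the latest contentInstance of a container.
TOOL_TO_RESOURCE = {
    "get_temperature": "cse-in/weatherStation/temperature/la",
    "get_humidity": "cse-in/weatherStation/humidity/la",
}

RETRIEVE = 2  # oneM2M operation code for RETRIEVE


def mcp_call_to_onem2m(tool_name: str, originator: str) -> dict:
    """Translate an MCP tool call into a oneM2M RETRIEVE request primitive."""
    if tool_name not in TOOL_TO_RESOURCE:
        raise KeyError(f"no oneM2M mapping for tool '{tool_name}'")
    return {
        "op": RETRIEVE,
        "to": TOOL_TO_RESOURCE[tool_name],
        "fr": originator,            # originator ID (assumed value)
        "rqi": str(uuid.uuid4()),    # unique request identifier
        "rvi": "3",                  # release version indicator (assumed)
    }


def onem2m_response_to_mcp(response: dict) -> dict:
    """Wrap the 'con' value of a returned contentInstance as MCP text content."""
    content = response["pc"]["m2m:cin"]["con"]
    return {"content": [{"type": "text", "text": str(content)}]}
```

A real IPE would also need to send the request over an actual binding (for example HTTP) and handle error status codes, which this sketch leaves out.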

Q: Would you elaborate on the new requirements?

IF: There are several groups of requirements to consider. Let us begin with authentication and authorization functions that are present in all systems. These are key building blocks for security. They help developers to implement access control policies to subsystems and to IoT data. Think of the case where a system manager wants to allow some users access to all data, while applying narrower access rights for another set of users. How will this scenario play out when one or more agents want to access data? Might there be a response protocol that sends policy permissions information back to an Agent or an overlay protocol that adds conditions to MCP requests?
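One way to picture the open question is a policy check whose outcome is reported back to the agent rather than silently dropped. The sketch below is an assumption, not an existing oneM2M or MCP mechanism: the policy table, originator IDs, and path prefixes are hypothetical, while the operation flags use the oneM2M access control operation values.

```python
from enum import IntFlag


class Op(IntFlag):
    """oneM2M access control operation flags (combinable as a bitmask)."""
    CREATE = 1
    RETRIEVE = 2
    UPDATE = 4
    DELETE = 8
    NOTIFY = 16
    DISCOVERY = 32


# Hypothetical policy table. In a real CSE these rules would live in
# accessControlPolicy resources; the originators and paths are made up.
POLICIES = [
    {"originators": {"CadminAgent"}, "prefix": "cse-in/",
     "allowed": Op.RETRIEVE | Op.UPDATE | Op.DISCOVERY},
    {"originators": {"CguestAgent"}, "prefix": "cse-in/weatherStation/outdoor/",
     "allowed": Op.RETRIEVE},
]


def check_request(originator: str, operation: Op, target: str) -> dict:
    """Return an MCP-style result telling the agent whether access is granted."""
    for rule in POLICIES:
        if (originator in rule["originators"]
                and target.startswith(rule["prefix"])
                and (rule["allowed"] & operation) == operation):
            return {"isError": False,
                    "content": [{"type": "text", "text": "granted"}]}
    reason = f"{originator} may not {operation.name} {target}"
    return {"isError": True, "content": [{"type": "text", "text": reason}]}
```

Returning the reason as text gives the agent something explicit to relay to the user instead of leaving it to guess why a request failed.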

Another topic for consideration is the role of oneM2M’s notifications function. Putting this into context, an IoT entity can subscribe to receive notifications from a CSE when threshold conditions are met in another IoT entity. A CSE, for example, would recognize a change in status (e.g., “temperature exceeds threshold X”) and trigger a notification message to a monitoring application. While we are accustomed to AI Agents sending information requests, it is less clear what happens when an Agent receives a notification.
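How an agent should react to an unsolicited notification is still an open question. One possible arrangement, sketched below under stated assumptions, is an inbox that queues incoming notifications and turns them into text the agent can reason about later. The class name, the threshold value, and the choice to check the threshold on the receiving side are all hypothetical; the `m2m:sgn` / `nev` / `rep` nesting follows the oneM2M notification format.

```python
from collections import deque
from typing import Optional

TEMPERATURE_LIMIT_C = 30.0  # hypothetical "threshold X"


class AgentInbox:
    """Queues notifications until the agent is ready to reason about them."""

    def __init__(self) -> None:
        self._pending = deque()

    def on_notification(self, notification: dict) -> None:
        """Handle a oneM2M notification carrying a new contentInstance."""
        cin = notification["m2m:sgn"]["nev"]["rep"]["m2m:cin"]
        value = float(cin["con"])
        if value > TEMPERATURE_LIMIT_C:
            self._pending.append(
                f"Temperature is {value} C, above the limit of "
                f"{TEMPERATURE_LIMIT_C} C."
            )

    def next_prompt(self) -> Optional[str]:
        """Return the oldest pending event as text for the agent, if any."""
        return self._pending.popleft() if self._pending else None
```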

Protocols to manage authentication, authorization, and notifications are examples of the topics that will define a better and more functional IPE than the basic one I created for my experimental work. More rigorous functionality will be needed for operationally dependable Agentic IoT systems.

The concept of notifications brings us to the topic of time series events and data. What happens when an agent requests the current humidity level as well as corresponding data from one month and one year ago? A response is possible if historical data is accessible from the CSE. We also need to consider the possibility that the Agent might invent a response if the historical data is not available. I have observed this kind of behaviour in my coding experiments, with the LLM apologizing for ignoring instructions and fabricating responses.
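One guardrail worth considering is for the tool itself to mark missing history explicitly, so the LLM receives "unavailable" rather than an empty gap it might fill in. The sketch below is a minimal illustration under assumptions: the function names, the one-hour matching tolerance, and the 30-day and 365-day offsets are hypothetical choices, and an explicit marker reduces but does not guarantee the absence of fabricated answers.

```python
from datetime import datetime, timedelta


def reading_near(history, when, tolerance=timedelta(hours=1)):
    """Return the stored value closest to `when`, or None if none is close enough."""
    candidates = [(abs(ts - when), value) for ts, value in history
                  if abs(ts - when) <= tolerance]
    return min(candidates, key=lambda c: c[0])[1] if candidates else None


def humidity_report(history, now):
    """Build a tool result that marks missing data explicitly."""
    report = {}
    for label, offset in [("now", timedelta(0)),
                          ("one_month_ago", timedelta(days=30)),
                          ("one_year_ago", timedelta(days=365))]:
        value = reading_near(history, now - offset)
        report[label] = ({"status": "ok", "humidity_percent": value}
                         if value is not None
                         else {"status": "unavailable"})
    return report
```

Here `history` is assumed to be a list of `(timestamp, value)` pairs already retrieved from the CSE.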

Q: What makes the Agentic AI topic important to Deutsche Telekom (DT)?

IF: The issue is important not just to DT but to all telecommunications service providers. That is because AI and agents will be part of the solution mix to automate parts of our network management activities. DT views the use of agentic AI on networks as an accelerator on the journey towards autonomous networks. For example, DT has developed a multi-agent system called RAN Guardian that analyzes network behavior in real time to detect performance issues. It then recommends or, in some cases, autonomously implements actions to resolve them. After a successful trial this year, DT has started implementing RAN Guardian across its network. The TM Forum has already started a movement towards autonomous networks and it has defined different levels of autonomy. So, agentic AI looks like a promising way to automate networks, but more investigation is still required to understand the limits of this technology.

In my coding activities, for example, I often use LLMs for boilerplate components. However, for complex problems or coding that resides deep in a library, even the best LLMs fail. It is important to understand failure modes if we are to apply these new technologies correctly and to figure out what guardrails are necessary to prevent adverse behaviours. Let me give you an example. I am a private pilot, so I set up an agent to monitor weather conditions ten days ahead to help me look out for opportunities to go flying. On an afternoon that the agent reported as good for flying, I double-checked the conditions via other sources and noted that they were poor. When I asked the agent why it had ignored the wind speed limits I had specified, it returned an “I am sorry for getting it wrong” message. If we at DT and other service providers are to bring Agentic AI into our environments, it is important to understand its functions and its performance limits.

Q: Are there any other insights you have gained from your work to date?

IF: Yes, there are a couple of points I would like to share. Firstly, the pace at which the A2A and MCP protocols have developed is amazing. You need only search for MCP servers to see how the major AI companies and hyperscalers are readying for this segment of the market. A key factor has been their developer outreach initiatives and the software they make available to developers. This is a lesson for oneM2M; there is a need to make open-source tools, such as ACME CSE, available and easily accessible. I think that the oneM2M developer tools and recipes also help.

A second observation is about the flexibility in MCP. When an Agent needs to execute a task, it queries an MCP server to check what tools are available to access. A system manager can change that list of tools at any time. This means that a tool’s functionality can change over time. It also means that an agent might autonomously choose a different tool to complete a subsequent task.
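Because the tool list can change between tasks, it can be useful to detect and log exactly what changed. The following sketch compares two tool lists of the shape an MCP server returns (entries with `name`, `description`, and `inputSchema`). The function names are hypothetical and this is only one way to track such changes.

```python
import hashlib
import json


def tools_fingerprint(tools: list) -> str:
    """Hash a tool list so any change in name, description, or schema shows up."""
    canonical = json.dumps(sorted(tools, key=lambda t: t["name"]), sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_tools(old: list, new: list) -> dict:
    """Report which tools were added, removed, or modified between two listings."""
    old_by_name = {t["name"]: t for t in old}
    new_by_name = {t["name"]: t for t in new}
    shared = old_by_name.keys() & new_by_name.keys()
    return {
        "added": sorted(new_by_name.keys() - old_by_name.keys()),
        "removed": sorted(old_by_name.keys() - new_by_name.keys()),
        "modified": sorted(n for n in shared if old_by_name[n] != new_by_name[n]),
    }
```

Comparing fingerprints is a cheap first check; `changed_tools` is only needed when the fingerprints differ.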

A final puzzling observation relates to the cost of using MCP servers. All agent and LLM activities are tariffed in terms of tokens. When an Agent invokes an LLM to process a user instruction, that takes up a certain number of tokens. When an Agent queries an MCP server to find out what tools are available, that consumes additional tokens. In my experiments, I noticed that a Google agent requests a list of available tools, completes its operation, and then asks for the list of available tools again. This seems costly, both in terms of task-completion overhead and consumption of tokens. As we progress with our investigations and standardization in oneM2M, I hope to gain more insight into this procedural arrangement.
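One conceivable mitigation for the repeated listing, not something tested in the experiments described here, is to cache the tool list on the agent side for a short period. The sketch below assumes a hypothetical `fetch_tools` callable that performs the actual listing request; the class name and the 60-second lifetime are likewise illustrative.

```python
import time


class ToolListCache:
    """Reuses a fetched tool list until it is older than `ttl_seconds`."""

    def __init__(self, fetch_tools, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch_tools = fetch_tools  # hypothetical callable returning the tool list
        self._ttl = ttl_seconds
        self._clock = clock
        self._tools = None
        self._fetched_at = 0.0

    def get(self):
        """Return the cached list, refreshing it only when missing or expired."""
        now = self._clock()
        if self._tools is None or now - self._fetched_at >= self._ttl:
            self._tools = self._fetch_tools()
            self._fetched_at = now
        return self._tools
```

The trade-off is staleness: since a system manager can change the tool list at any time, a longer lifetime saves more requests but raises the chance of the agent acting on an outdated list.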